iPUNet: Iterative Cross Field Guided Point Cloud Upsampling

Guangshun Wei¹, Hao Pan², Shaojie Zhuang¹, Yuanfeng Zhou¹, Changjian Li³

¹Shandong University, ²Microsoft Research Asia, ³The University of Edinburgh

IEEE Transactions on Visualization and Computer Graphics (TVCG), 2023

Abstract

Point clouds acquired by 3D scanning devices are often sparse, noisy, and non-uniform, causing a loss of geometric features.

To make such point clouds more usable in downstream applications, we present iPUNet, a learning-based point upsampling method that generates dense and uniform points at arbitrary ratios and better captures sharp features.

To generate feature-aware points, we introduce cross fields, aligned to sharp geometric features through self-supervision, to guide point generation.
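
To make the cross-field idea concrete, the sketch below assumes the common 4-rotationally-symmetric (4-RoSy) definition: at a point with unit normal n, a cross is the set of tangent directions {u, v, -u, -v} with v = n × u, so two crosses are the same when they differ by a multiple of 90°. The helper names (`cross_frame`, `cross_directions`, `cross_angle_difference`) are hypothetical. This is not iPUNet's network or its self-supervised alignment loss; it only shows the local frame a cross field defines and the 90°-periodic comparison such a field requires.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


def cross_frame(n: Vec, u: Vec) -> Tuple[Vec, Vec, Vec]:
    """Build an orthonormal frame (u, v, n) from a normal and one cross direction."""
    n = normalize(n)
    # Remove the normal component so that u lies in the tangent plane.
    d = dot(u, n)
    u = normalize((u[0] - d * n[0], u[1] - d * n[1], u[2] - d * n[2]))
    # The second cross direction is u rotated by 90 degrees about n.
    v = cross(n, u)
    return u, v, n


def cross_directions(u: Vec, v: Vec) -> List[Vec]:
    """The four tangent directions represented by a single cross."""
    return [u, v, (-u[0], -u[1], -u[2]), (-v[0], -v[1], -v[2])]


def cross_angle_difference(theta_a: float, theta_b: float) -> float:
    """Smallest rotation (radians) between two crosses given by in-plane angles.

    Both angles are measured in a shared tangent basis; a cross repeats every pi/2.
    """
    d = (theta_a - theta_b) % (math.pi / 2)
    return min(d, math.pi / 2 - d)
```

For instance, `cross_angle_difference(0.0, math.pi / 2)` returns 0.0, reflecting that a cross rotated by 90° is the same cross.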

Given the frames defined by the cross field, we enable arbitrary-ratio upsampling by learning a local parameterized surface at each input point.

The learned surface takes the neighboring points and 2D tangent-plane coordinates as input and maps the coordinates onto a continuous 3D surface, from which output points can be sampled at arbitrary ratios.
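
iPUNet learns the map from tangent-plane coordinates to the surface with a network. As a rough, non-learned stand-in, the sketch below fits a quadratic height field h(s, t) = a s² + b s t + c t² by least squares to the neighbors of a point p, expressed in its local frame (u, v, n), and then maps any (s, t) to p + s u + t v + h(s, t) n. The quadratic fit is a classical substitute chosen only for illustration, and all function names are hypothetical; the point is that a continuous local parameterization lets one sample as many output points as desired.

```python
from typing import List, Sequence, Tuple

Vec = Tuple[float, float, float]
Frame = Tuple[Vec, Vec, Vec]  # (u, v, n): two tangent directions and the normal


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def to_local(p: Vec, frame: Frame, q: Vec) -> Vec:
    """Coordinates (s, t, h) of q relative to p in the frame (u, v, n)."""
    u, v, n = frame
    d = (q[0] - p[0], q[1] - p[1], q[2] - p[2])
    return _dot(d, u), _dot(d, v), _dot(d, n)


def solve3(A: List[List[float]], b: List[float]) -> List[float]:
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x


def fit_height_field(p: Vec, frame: Frame, neighbors: Sequence[Vec]) -> List[float]:
    """Least-squares coefficients (a, b, c) of h(s, t) = a*s^2 + b*s*t + c*t^2."""
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for q in neighbors:
        s, t, h = to_local(p, frame, q)
        phi = (s * s, s * t, t * t)
        for i in range(3):
            atb[i] += phi[i] * h
            for j in range(3):
                ata[i][j] += phi[i] * phi[j]
    # Normal equations (A^T A) x = A^T h; neighbors must spread in both s and t.
    return solve3(ata, atb)


def surface_point(p: Vec, frame: Frame, coeffs: Sequence[float],
                  s: float, t: float) -> Vec:
    """Map tangent-plane coordinates (s, t) onto the fitted local surface in 3D."""
    u, v, n = frame
    a, b, c = coeffs
    h = a * s * s + b * s * t + c * t * t
    return (p[0] + s * u[0] + t * v[0] + h * n[0],
            p[1] + s * u[1] + t * v[1] + h * n[1],
            p[2] + s * u[2] + t * v[2] + h * n[2])
```

To upsample by a ratio r in this toy setting, one would draw r tangent coordinates (s, t) around each input point and call `surface_point` on each pair.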

To address the non-uniformity of the input points, we further introduce, on top of cross-field-guided upsampling, an iterative strategy that refines the point distribution by moving the sparse points onto the desired continuous 3D surface in each iteration.

Within only a few iterations, the sparse points become evenly distributed, and their corresponding dense samples are more uniform and better capture geometric features.
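
The effect of iterating can be illustrated with a toy loop in which the desired continuous surface is known in closed form: the unit sphere. Each iteration upsamples the current sparse points with a hypothetical stand-in (random jitter followed by projection onto the sphere, in place of iPUNet's learned surfaces), then re-selects the same number of sparse points from the dense output by farthest point sampling, so the new sparse points lie on the surface and are spread apart. This loop is an illustrative assumption rather than the paper's procedure; the functions, the sphere, and the parameters `ratio` and `radius` are all hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def project_to_sphere(q: Point) -> Point:
    """Hypothetical stand-in for the learned surface: nearest point on the unit sphere."""
    r = math.sqrt(q[0] ** 2 + q[1] ** 2 + q[2] ** 2)
    return (q[0] / r, q[1] / r, q[2] / r)


def upsample(sparse: Sequence[Point], ratio: int, radius: float,
             rng: random.Random) -> List[Point]:
    """Hypothetical upsampler: `ratio` jittered copies per point, projected to the surface."""
    dense = []
    for p in sparse:
        for _ in range(ratio):
            jittered = tuple(c + rng.uniform(-radius, radius) for c in p)
            dense.append(project_to_sphere(jittered))
    return dense


def farthest_point_sampling(points: Sequence[Point], k: int) -> List[Point]:
    """Greedily pick k points, each as far as possible from those already chosen."""
    chosen = [0]
    min_d = [math.dist(points[0], q) for q in points]
    while len(chosen) < k:
        nxt = max(range(len(points)), key=min_d.__getitem__)
        chosen.append(nxt)
        min_d = [min(m, math.dist(points[nxt], q)) for m, q in zip(min_d, points)]
    return [points[i] for i in chosen]


def min_spacing(points: Sequence[Point]) -> float:
    """Smallest pairwise distance, used here as a simple uniformity indicator."""
    return min(math.dist(a, b) for i, a in enumerate(points) for b in points[i + 1:])


def iterative_refine(sparse: Sequence[Point], iterations: int, ratio: int = 4,
                     radius: float = 0.2, seed: int = 0) -> List[Point]:
    rng = random.Random(seed)
    current = list(sparse)
    for _ in range(iterations):
        dense = upsample(current, ratio, radius, rng)
        # Keep the sparse set's size, but re-draw it from samples on the surface.
        current = farthest_point_sampling(dense, len(current))
    return current
```

Starting from a tight cluster of points, a few calls of this loop increase `min_spacing`, mirroring in a very simplified way the claim that iterating evens out the sparse distribution.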

Through extensive evaluations on diverse scans of objects and scenes, we demonstrate that iPUNet robustly handles noisy and non-uniformly distributed inputs and outperforms state-of-the-art point cloud upsampling methods.


Paper [PDF]

Data [Code and Data]

Citation:

Wei, Guangshun, Hao Pan, Shaojie Zhuang, Yuanfeng Zhou, and Changjian Li. "iPUNet: Iterative Cross Field Guided Point Cloud Upsampling." IEEE Transactions on Visualization and Computer Graphics (2023).

Algorithm Pipeline

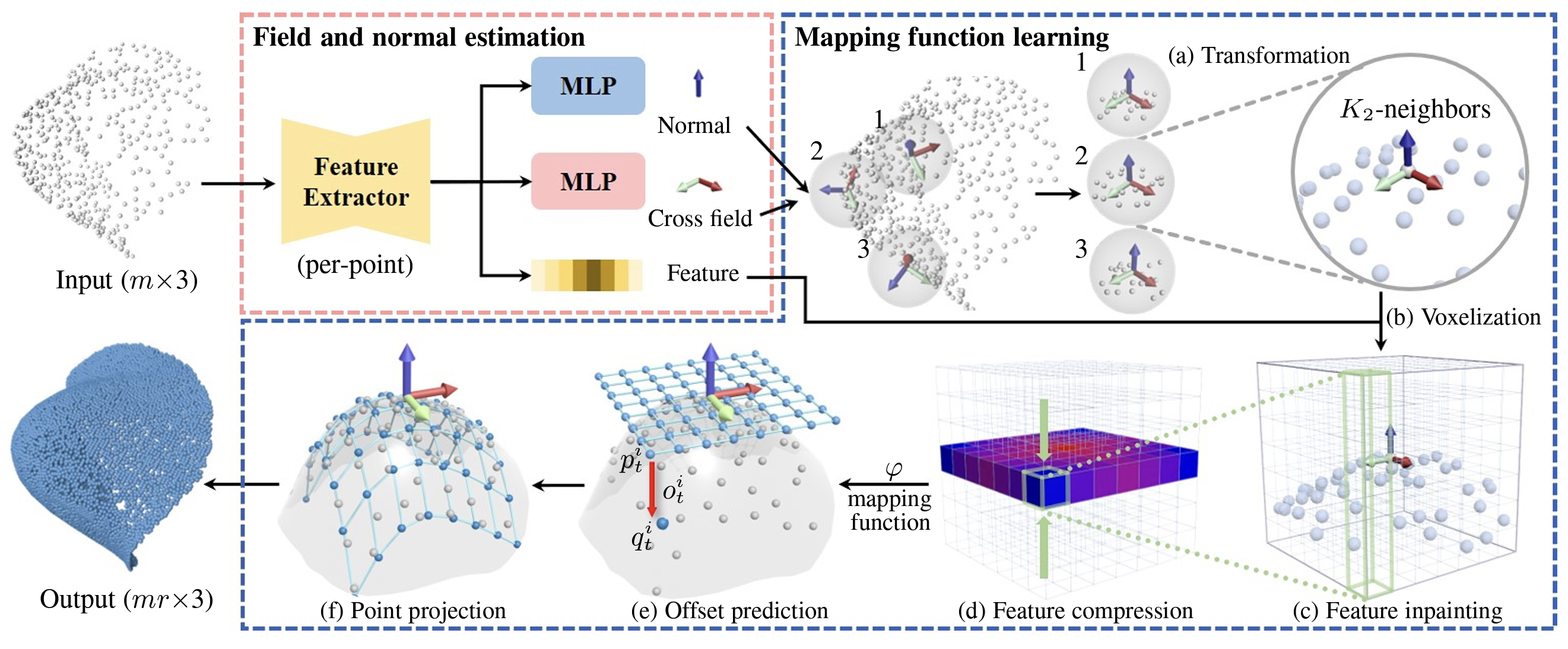

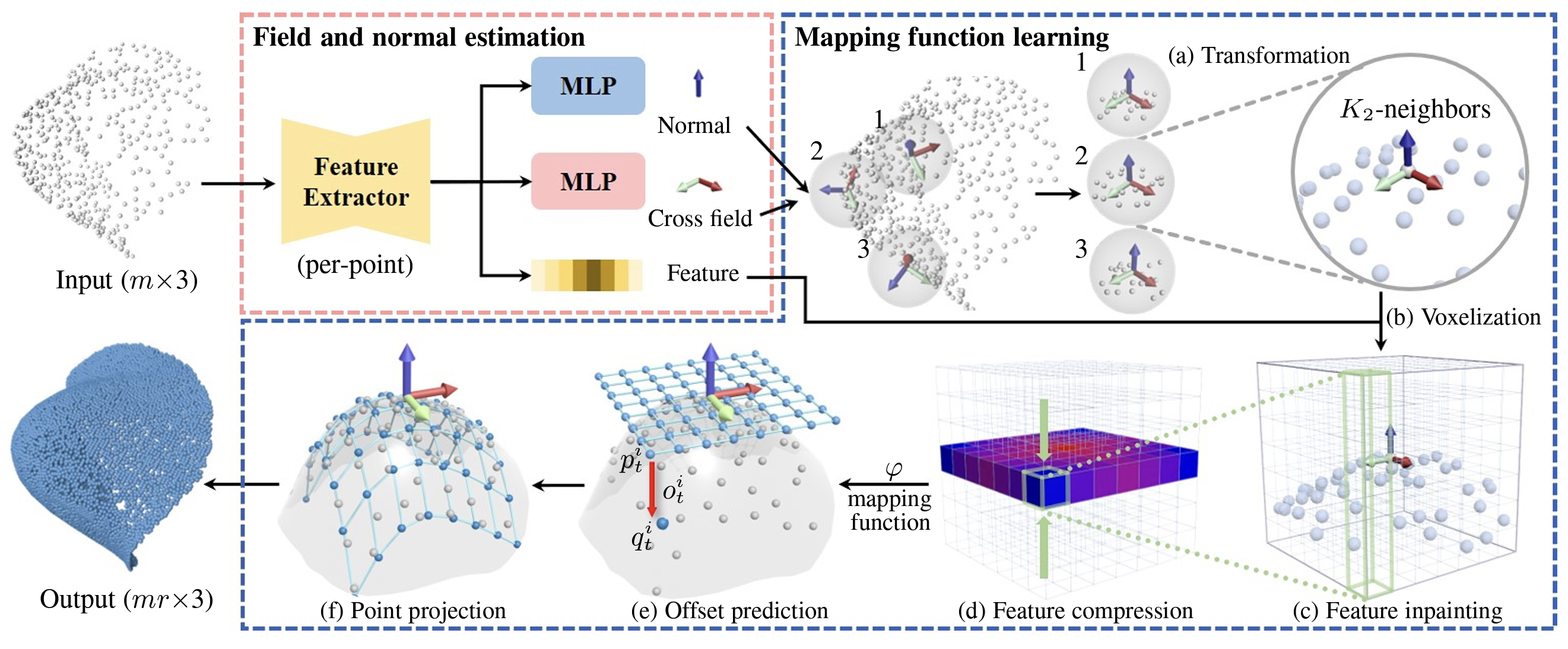

Fig. 1. Overview of our network.

iPUNet has two core components: field and normal estimation, and mapping function learning.

The first component estimates the per-point cross field, normal, and feature (Sec. 3.2), which form the basis for the second component.

Given the frames defined by the cross field, the second component learns a mapping function that maps any point on the tangent plane onto the target shape (Sec. 3.3).

The learned mapping function enables us to upsample at arbitrary ratios.
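
Because the mapping is continuous, the output size need not be an integer multiple of the input size. One simple way to realize a ratio r for N input points, assumed here rather than taken from the paper, is to distribute round(r·N) output samples as evenly as possible across the per-point patches and to draw each patch's 2D tangent coordinates uniformly from a disk; the resulting (s, t) pairs would then be fed to the learned mapping. The function names and the disk radius are hypothetical.

```python
import math
import random
from typing import List, Tuple


def per_point_counts(num_points: int, ratio: float) -> List[int]:
    """Split round(ratio * num_points) output samples as evenly as possible."""
    total = round(ratio * num_points)
    base, extra = divmod(total, num_points)
    # The first `extra` patches each receive one additional sample.
    return [base + (1 if i < extra else 0) for i in range(num_points)]


def sample_disk(count: int, radius: float,
                rng: random.Random) -> List[Tuple[float, float]]:
    """Uniform (s, t) samples in a disk; the sqrt keeps the density uniform in area."""
    samples = []
    for _ in range(count):
        r = radius * math.sqrt(rng.random())
        theta = 2.0 * math.pi * rng.random()
        samples.append((r * math.cos(theta), r * math.sin(theta)))
    return samples
```

For example, `per_point_counts(5, 3.4)` returns `[4, 4, 3, 3, 3]`, i.e., 17 output points in total. Python's built-in `round` rounds exact halves to the nearest even integer, which matters only when r·N ends in .5.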

Representative Results

Upsampling on General Shapes

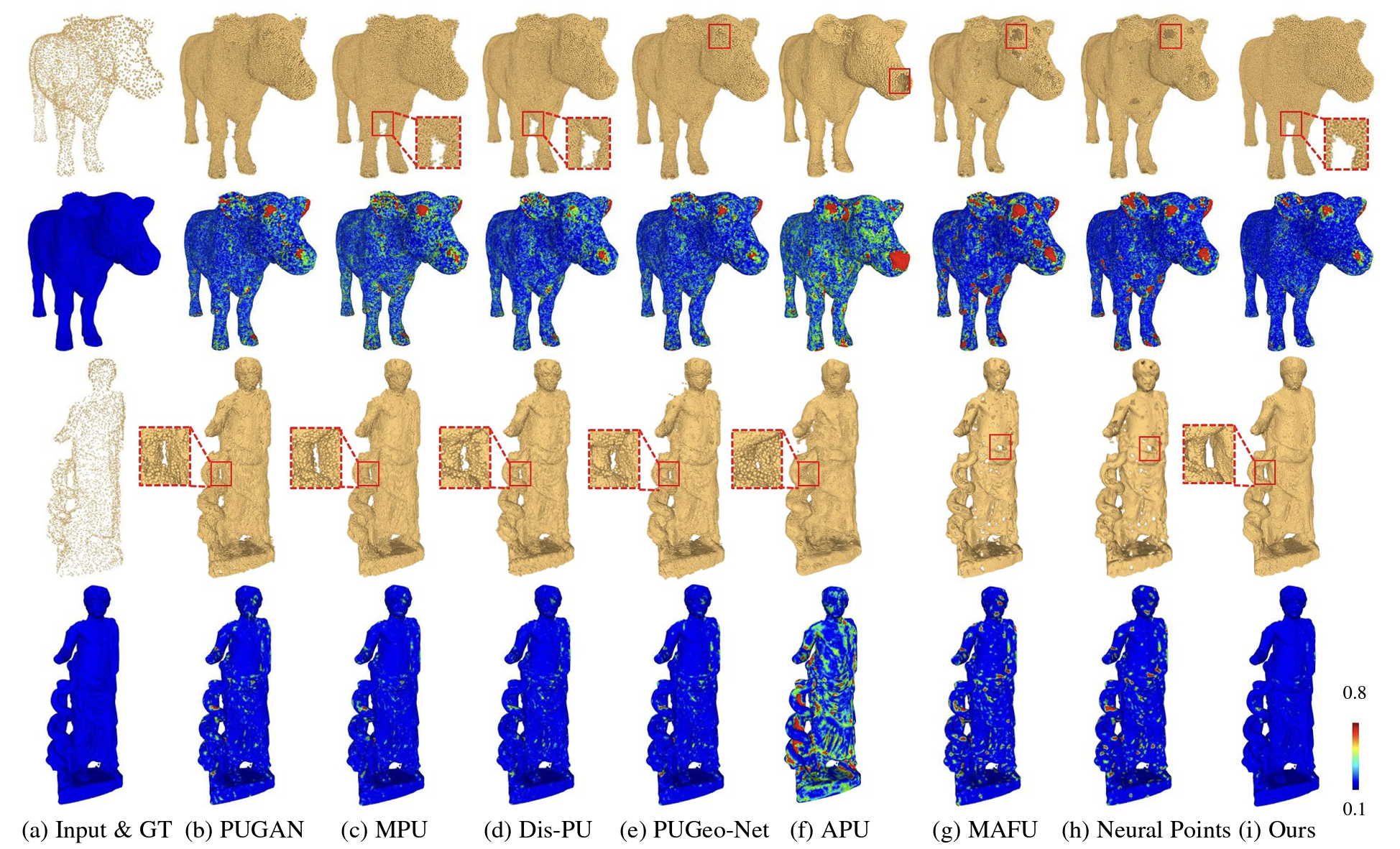

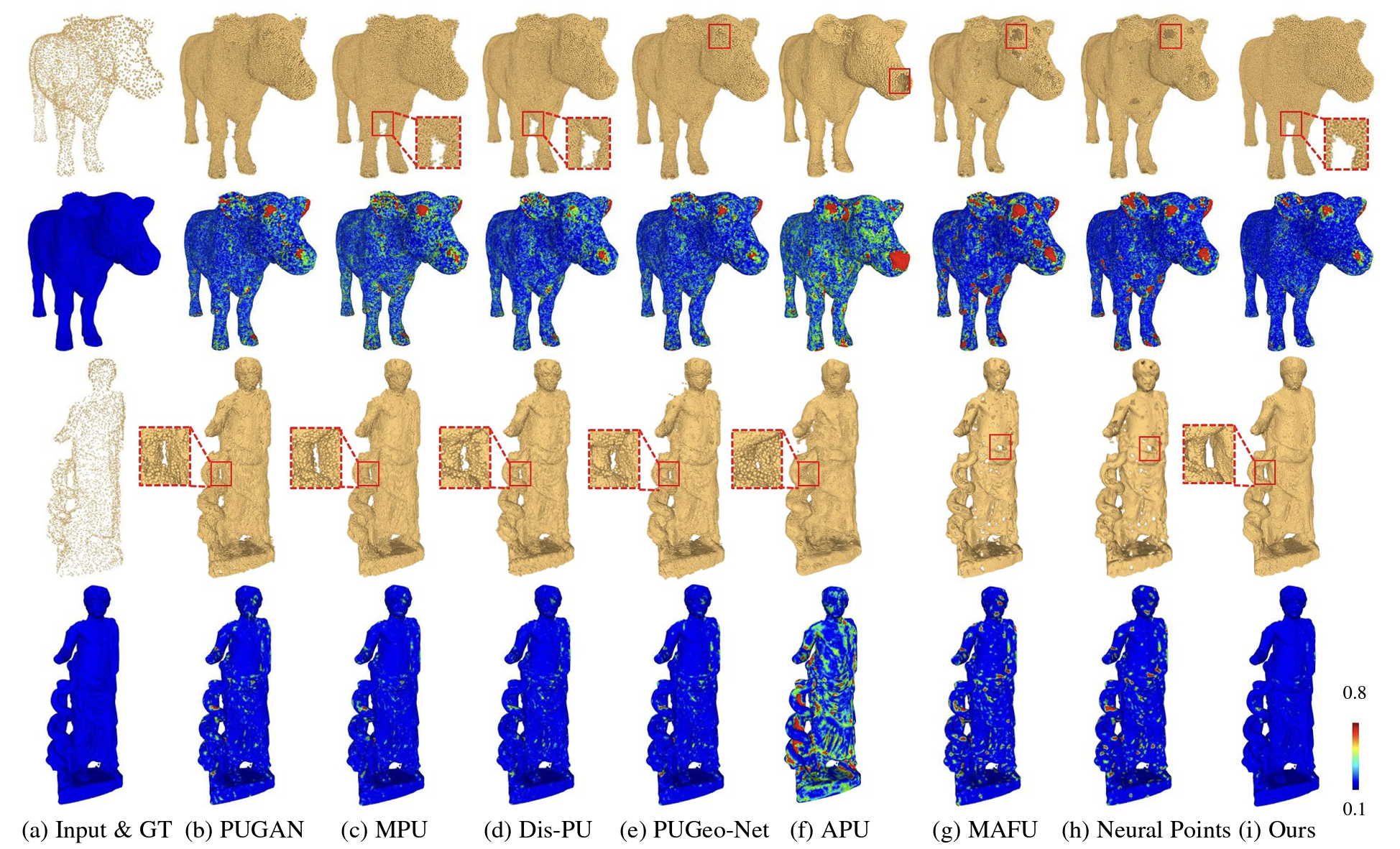

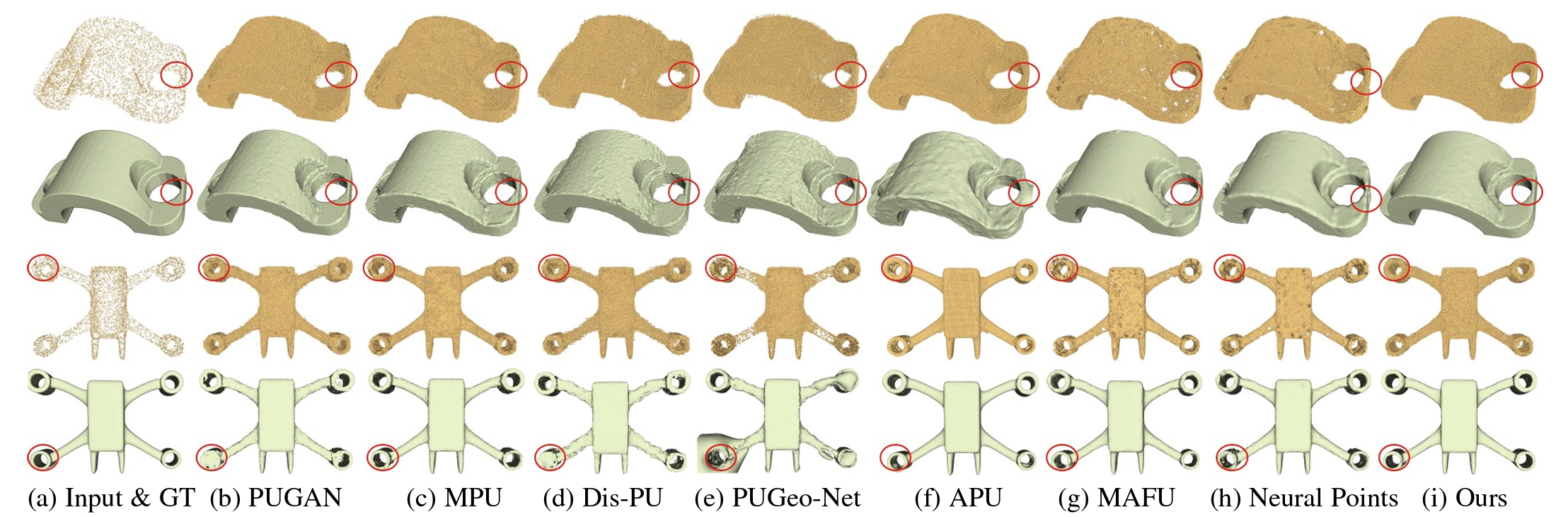

Fig. 2. Visual Comparison with ×16 Upsampling. Each model occupies two rows: the first row shows the upsampled results, and the second row color-codes the Chamfer distance from the ground truth to the upsampled points. The largest errors, shown in red, appear around holes, while noise and outliers are usually shown in green. Our results are closer to blue, i.e., closer to the ground truth.
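
The error maps in Fig. 2 color each ground-truth point by its distance to the nearest upsampled point. A brute-force sketch of that per-point error, of a symmetric Chamfer distance, and of a blue-green-red color ramp is given below. Chamfer distance conventions vary (squared vs. unsquared distances, sum vs. mean), and the exact color map used for the figure is not specified, so both choices here are assumptions; the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def nearest_distances(src: Sequence[Point], dst: Sequence[Point]) -> List[float]:
    """For each point in src, the distance to its nearest neighbor in dst (brute force)."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def chamfer_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Chamfer distance: mean nearest-neighbor distance in both directions."""
    da = nearest_distances(a, b)
    db = nearest_distances(b, a)
    return sum(da) / len(da) + sum(db) / len(db)


def error_color(err: float, max_err: float) -> Tuple[float, float, float]:
    """Map an error to RGB: blue at 0, green at max_err / 2, red at max_err or above."""
    t = min(err / max_err, 1.0) if max_err > 0 else 0.0
    if t < 0.5:
        return (0.0, 2.0 * t, 1.0 - 2.0 * t)
    return (2.0 * t - 1.0, 2.0 - 2.0 * t, 0.0)
```

The per-point colors for a figure like Fig. 2 would come from `error_color(d, max_err)` for each `d` in `nearest_distances(ground_truth, upsampled)`. For clouds with many thousands of points, a spatial index such as a k-d tree would replace the quadratic-time search.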

Upsampling on CAD Shapes

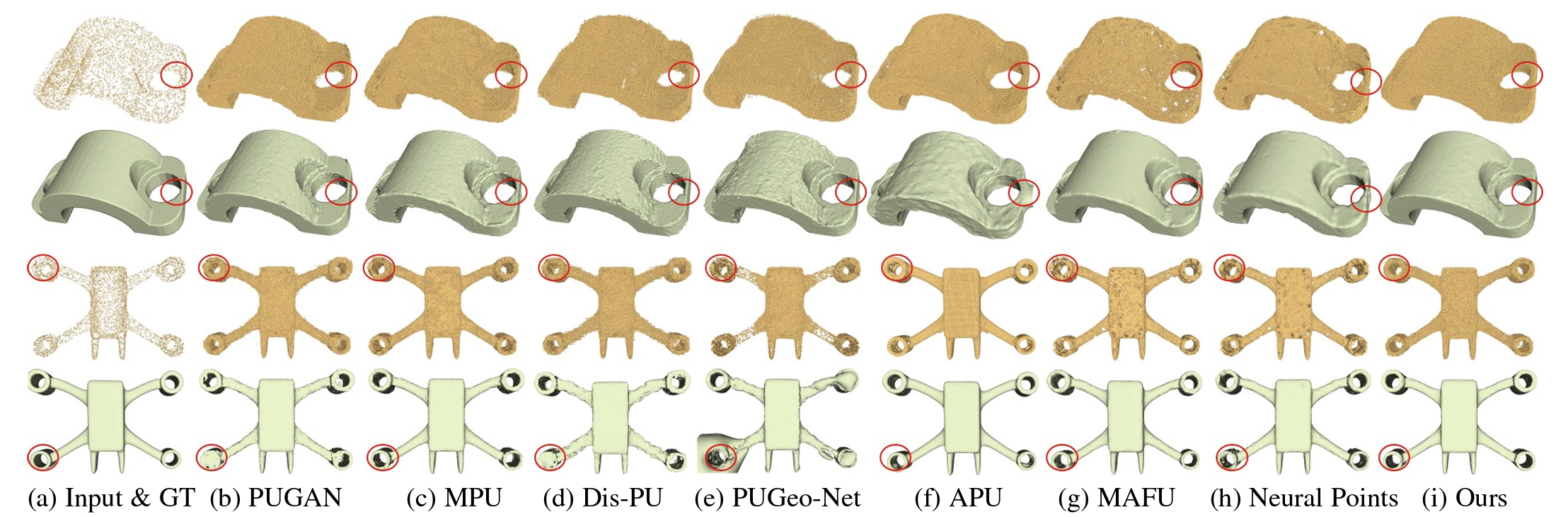

Fig. 3. CAD Shape Upsampling (×16) and Reconstruction. For each example, the first row shows the upsampled result and the second row shows the reconstructed surface.

Upsampling on Real Scans

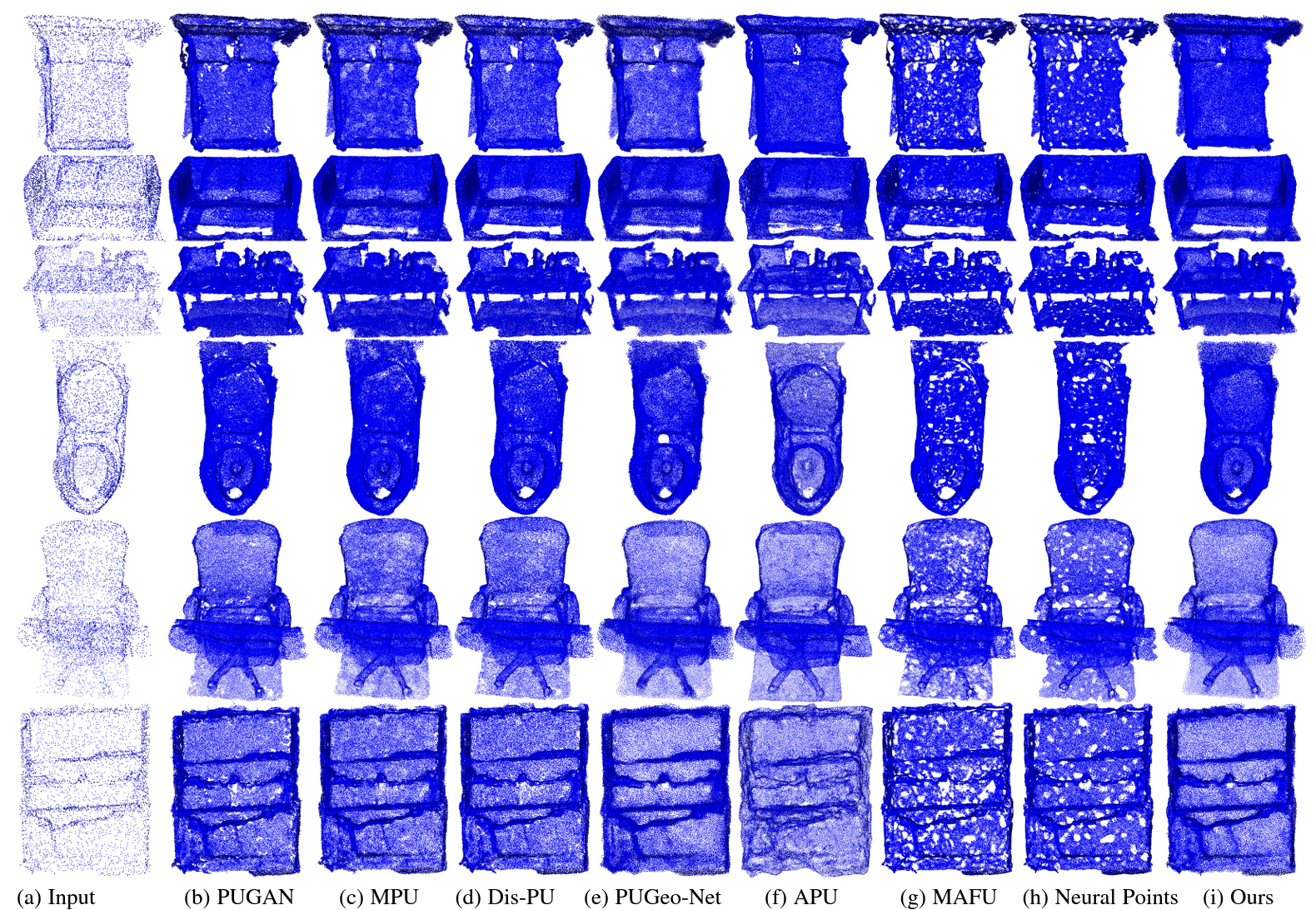

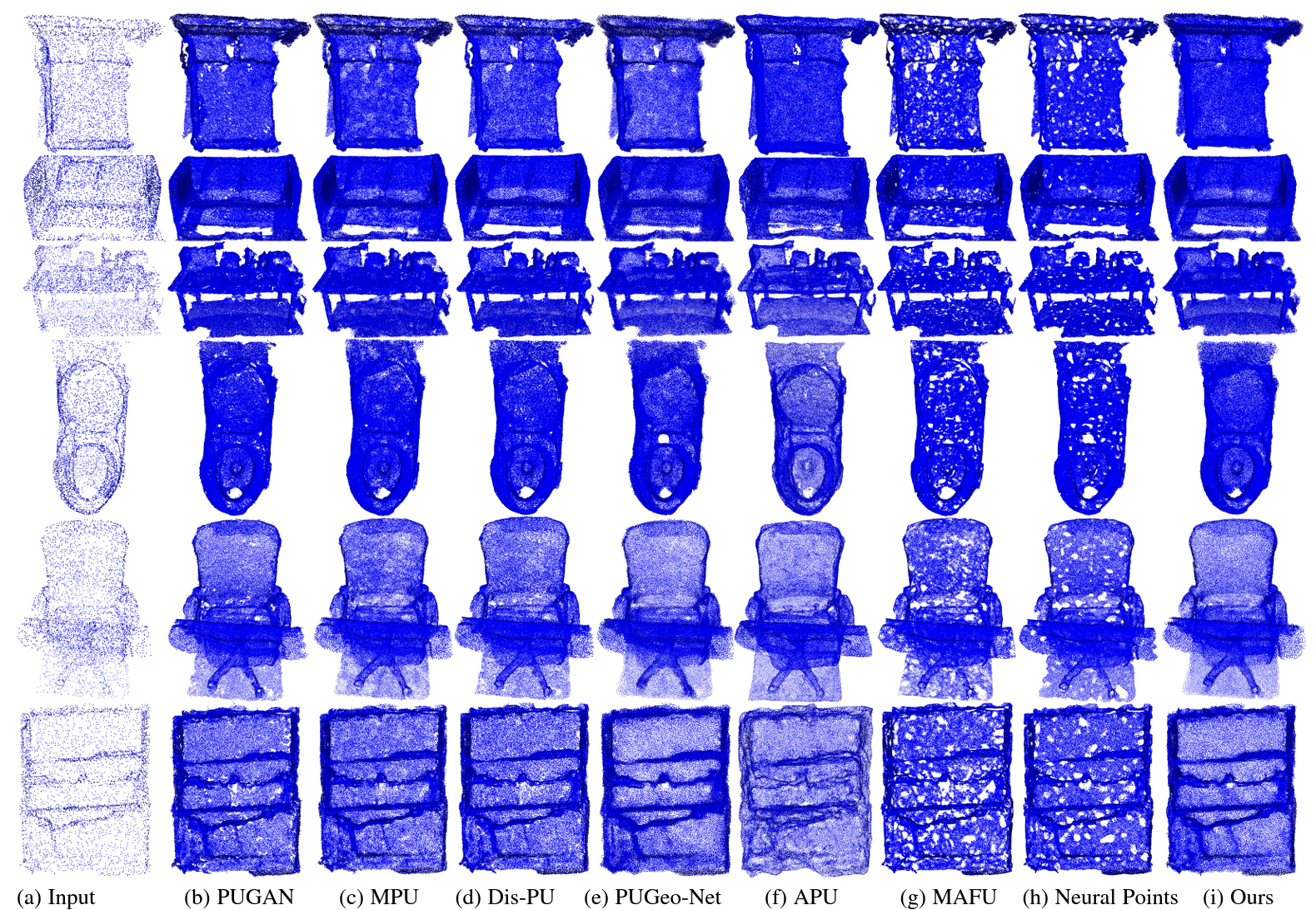

Fig. 4. Upsampling (×16) on Real-world Scans. We compare our method with state-of-the-art methods on the real-world scans from [38]. Although our results are not perfect, they show rich geometric details and are significantly better than those of the other methods.


©Changjian Li. Last update: November 1, 2023.